Cities & URL structure

The following is the optimal URL progression. It is an example based on keyword research, not the final structure the website must have. We can add as many keyword variations as we find useful for other keywords under consideration, but the order of the flow shown below must be respected; that is the important aspect. It is advisable to include geographic targeting wherever the keyword variations justify it.

www.thirtythreeweb.co.uk/tax-jobs/

www.thirtythreeweb.co.uk/tax-jobs/london-tax-jobs

www.thirtythreeweb.co.uk/tax-jobs/norwich-tax-jobs

www.thirtythreeweb.co.uk/tax-jobs/manchester-tax-jobs

www.thirtythreeweb.co.uk/tax-recruitment

www.thirtythreeweb.co.uk/tax-recruitment/london-tax-recruitment

www.thirtythreeweb.co.uk/tax-recruitment/norwich-tax-recruitment

www.thirtythreeweb.co.uk/tax-recruitment/manchester-tax-recruitment

The same pattern applies to all relevant variations identified in prior keyword research.
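The progression above can be generated mechanically once the keyword and city lists are known. The following is a minimal Python sketch, not a required implementation; the function name `silo_urls` and the slug rules (lowercase, spaces replaced by hyphens, trailing slash on top-level sections) are assumptions made for illustration.

```python
BASE = "www.thirtythreeweb.co.uk"

def silo_urls(keywords, cities, base=BASE):
    """Return each keyword's top-level URL followed by its city pages."""
    urls = []
    for keyword in keywords:
        slug = keyword.lower().replace(" ", "-")
        # Top-level section for the keyword, e.g. /tax-jobs/
        urls.append(f"{base}/{slug}/")
        for city in cities:
            city_slug = city.lower().replace(" ", "-")
            # City page nested under the section, e.g. /tax-jobs/london-tax-jobs
            urls.append(f"{base}/{slug}/{city_slug}-{slug}")
    return urls

for url in silo_urls(["tax jobs", "tax recruitment"],
                     ["London", "Norwich", "Manchester"]):
    print(url)
```

Adding a new keyword variation or city only extends the input lists; the order of the flow stays the same.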

Website Layout Issues

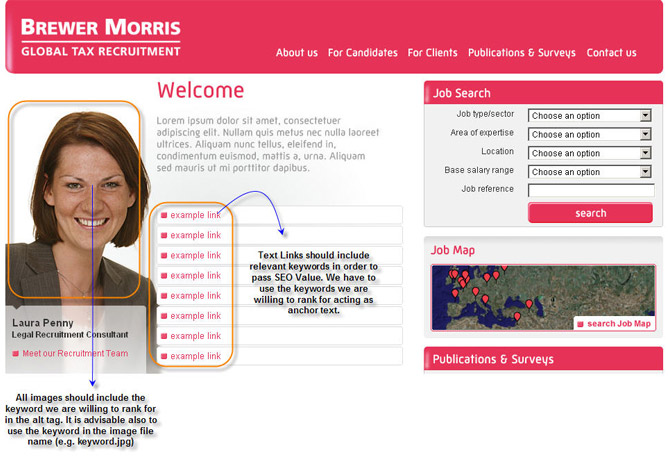

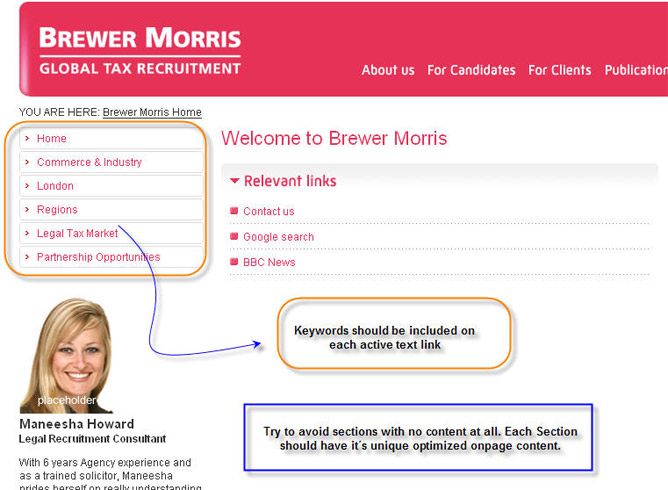

Use H1 tags on each section with pertinent keywords. Instead of using an image-based header, we should include H1 tags containing the keywords we are trying to rank for on each section. Combined with other factors, this tells spiders what each page is specifically about.

Title tags should always include main keywords. Do NOT use the same title tags on different sections. Try to make each section as unique as possible. When targeting more than one keyword on each section, prioritize the order of your keywords in the title tags according to your ranking interests.

In the navigation bar and buttons do NOT use image based links. Use active text links including the main keyword for each section as anchor text.

When working with images, ALWAYS set the alt attribute (commonly called the alt tag) to your most representative keyword. This is the keyword that spiders will index for that image.

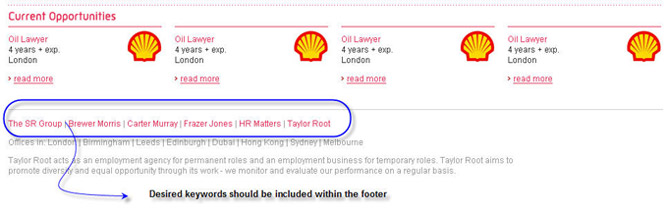

Within each website section include in the footer as many deep links to other sections as you can rather than always using the generic Home Footer. For instance, use different footer links on each page instead of always using the same links over and over again. This will enable spiders to gradually crawl and index your website entirely rather than just top level sections. This aspect will also be reinforced by providing deep incoming links from other websites.

Website Coding

Meta elements are intended to provide unique, descriptive data summarizing the keyword target and theme of each page. The search engines direct us to keep these descriptions as narrow in scope as possible.

Keep JavaScript and dynamically generated content to a minimum. Search engine spiders prefer static content and plain HTML or PHP pages.

Although it is not one of the most relevant variables, code validation is another component in the total equation. By having your code validated and W3C compliant, you make it easy for spiders to crawl your website and its contents. This is supposedly rewarded with better content indexation by Google.

Validate your code here:

XHTML Validation: http://validator.w3.org/

CSS Validation: http://jigsaw.w3.org/css-validator/

Try to minimize the use of characters or content that may confuse search engine spiders. Keep your code as clean as possible. Search engine spiders will come to your website and try to determine what it is about by analyzing on-page factors. If the content they read in your code is not clearly defined, or is mixed with other symbols and characters, the spiders may find it difficult to determine what your website is really about.

Advanced On-page Optimization – LSI & SILO structures

Latent Semantic Indexing

The LSI and SILO structures are regarded presently as the future of SEO on-page optimization. Google is known to have patented different technologies that make extensive use of LSI and results have shown that this technology is already operating on organic results these days.

So what is LSI?

LSI stands for Latent Semantic Indexing.

LSI allows a search engine to determine quickly the relevance of a website outside of the actual search query that a user would type in to search for content.

For example a website about dogs may naturally have content about dog training, canine obedience, dog history and German Shepherds.

LSI adds a new process when indexing a website. Not only are the keywords considered but the entire content of the website is considered as well. The whole site is now considered instead of just single keywords on a single page.

LSI determines whether a site has other terms that are semantically close to the queried keyword. This means that the algorithm is able to determine good content by the natural occurrence of other keywords within the website that are similar (semantic or semantically related) to the queried keyword. LSI lowers the effect of documents that are semantically distant.

There has been a massive amount of research that has gone into this algorithm. It is worthy of note that this simple method of determining semantic closeness to a queried word is surprisingly close to how an actual human would classify a document as being relevant. Yet it is an advanced automated process being conducted by thousands of computer servers.

Documents that are seen as semantically close tend to rank noticeably higher than documents that do not use semantics to cover their theme. LSI is the closest the search engines have come to mimicking human thought.

Why is Google paying so much attention to LSI and implementing it? Remember we talked about scrapers earlier?

LSI has the net effect of producing more relevant results for searchers. The days of search engine algorithms using single keyword matches are coming to a fast extinction. The search engines now know that single keyword factors can yield very low quality results by allowing spammers and lazy webmasters to manipulate the rankings.

LSI, in its most basic form, tries to associate words with concepts to ensure relevancy. For example, the words pool and table are associated with the term billiards and not swimming pools and kitchen tables. The words taco and bell are associated with a restaurant chain and not a popular Mexican food or musical instrument.

So what are the implications of LSI for our purposes?

LSI means that by covering the theme thoroughly we will be able to achieve high rankings above most of the sites out there.

So how do we cover our niche thoroughly?

For this purpose, we need to understand what Google thinks of a search term, that is, the keywords that Google associates with it (correlated keywords, synonyms, etc.). Later, in our blueprint of unique content, we will use these keywords throughout the website, each on its corresponding section. By covering a wide semantic net (keywords, related keywords and synonyms of those words) you will rank higher in your niche.

How is the design of the website involved in the process?

A website needs to be properly structured to take full advantage of LSI. Designing your site and your pages using synonyms and correlated keywords will yield higher quality content naturally. You will also be properly communicating the theme of your site to the search engines and will rank higher for your keywords.

What does LSI mean in practice?

In practice, LSI will be useful for developing our blueprint and the keyword-rich content for each section within our silo structures. By making exhaustive use of all the keywords that are semantically meaningful to Google in each case, we will be able to build highly optimized content that Google rewards.

Let's run a semantic search for the keyword apple as an example. This is done by performing the following search on Google: ~apple

Have a look at the related keywords that occur in results:

- Apple Computer

- QuickTime

- Apple’s

- Windows

- Computer

- Mac

- iMac

- G4

It is easy to see that at no point does Google treat the keyword apple as semantically related to the fruit. Instead, it associates it with computer-related terms.

So LSI enables us to understand the semantic logic that Google is looking for when analyzing our content and assigning a value to it.

What are SILOS?

As we develop the overall theme of the site as a whole, we will start to creep upwards in the rankings for very competitive keywords by concentrating on individual sub-themes that include less competitive keywords. By concentrating on the less broad (long tail) keywords in your sub-themes, you will begin to rank for broader and broader keywords, because you cover your site's overall theme at a very deep level.

This is done by strictly organizing your site into themes, or silos, and then including synonyms and related keywords in the content of the individual pages within each silo (LSI).

The most basic explanation of a silo is to keep all related information inside of one folder. By generating different SILOS we will be able to cover our niche with maximum detail for all the relevant keyword variations in different levels.

Developing your Blue Print

Now that we know what LSI means, how can we use it to our benefit on SEO projects? How are we supposed to structure the website based on our silo structure? It is time to design our own blueprint.

The process starts by creating specific content for each keyword within each sub-theme of its respective silo. This content will not be random: we already know how to use LSI to identify semantically related keywords, so it is time to use this information.

When you write each specific section, you will have to use semantically related keywords (in Google's understanding, as explained before) as well as available synonyms. Generating content this way defines your blueprint, and you will be able to populate your website with highly optimized, top-notch content from an SEO perspective.

Short-term requirements may not allow a website owner to dedicate so much time to silo structuring and content creation. There are also budget constraints based on the paid services to work on SILO & LSI structures.

Last but not least, though it is the optimal approach regarding SEO on-page factors, the LSI & Silo structuring is highly demanding on unique content volumes. Remember that you must apply LSI on each section so this will be a time consuming process to develop the best possible blueprint.

As we can easily observe, it is by no means a clear-cut decision. In the end, it all comes down to short-term against long-term requirements, as well as budget availability when working with an independent consultant.

SEO Basic Concepts

These are the basic SEO concepts that really matter. The idea is to replicate these concepts on every section of the website for all the different keywords we are targeting.

Title Tags: Always use the keyword you are targeting in your title tag. If you are targeting more than one keyword in a particular section, put the most important keyword first and then place the others in descending order of importance.

Meta Tags: Always include in your meta-keywords tag the specific keywords and important variations that you are targeting. This needs to be done in each section. For the meta-description tag, always use a concise and keyword-rich description of what you are promoting in that section. This is the description that search engine spiders will index and show on the SERPs.

H1, H2 Tags: Always include your desired keywords for each section in H tags acting as headers. Instead of just inserting an image with the keyword do insert an H1 tag that includes the main keyword you are targeting in that section.
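The title, meta and H1 rules above can be applied consistently by generating the tags from one prioritized keyword list per section. The following Python sketch is only an illustration: the function name `render_section_tags`, the " | " separator in the title and the choice of the first keyword for the H1 are assumptions, not requirements.

```python
from html import escape

def render_section_tags(keywords, description):
    """Build title, meta and H1 markup; `keywords` must be ordered by priority."""
    if not keywords:
        raise ValueError("at least one keyword is required")
    # Most important keyword first, the rest in descending order of importance.
    title = " | ".join(keyword.title() for keyword in keywords)
    return "\n".join([
        f"<title>{escape(title)}</title>",
        f'<meta name="keywords" content="{escape(", ".join(keywords))}">',
        f'<meta name="description" content="{escape(description)}">',
        # Text H1 carrying the main keyword, instead of an image header.
        f"<h1>{escape(keywords[0].title())}</h1>",
    ])

print(render_section_tags(
    ["london tax jobs", "tax jobs in london"],
    "Current London tax jobs from specialist recruiters.",
))
```

Because every section passes its own keyword list and description, no two sections end up with the same title tag.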

Always include About Us, Disclaimer, Contact Us and Privacy Policy pages in your website. This will provide higher levels of trust to your visitors and also to Google, thus enabling better positions.

If possible, have your code validated. Although its importance in the general ranking equation is not that significant compared to other variables, it helps. If having your website's code validated and W3C compliant is a realistic option, do not hesitate to embrace it.

Do NOT copy content from other websites. Do NOT use exactly or almost the same content on different pages within your website. Google applies a duplicate-content filter that decreases your website's value and can even penalize you if it finds extensive use of duplicated content. Google likes unique, original content, so it is always better to work under its rules. It is easier to scrape content, but the benefits will not last in the long term. Generating unique quality content for your specific niche is paramount to success.

Do NOT get links from link farms, blog farms, low-value websites or banned domains. Try to work according to Google's guidelines.

Always use the keyword you want to target in a specific section as anchor text when receiving links from other websites. In order to increase your rankings for that keyword on search engines you have to make those links count. That means you need to use that particular keyword as the anchor text in the text link.

Keep your keyword density for each section between 3 and 7 percent. The density of the target keyword on that particular page has to stay within this range for the keyword to register with the indexing spiders.
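Keyword density can be checked before a page is published. The sketch below is one possible way to compute it in Python; it assumes density is defined as the words belonging to keyword occurrences divided by the total word count, which is a common convention but not the only one. The function name `keyword_density` and the sample text are illustrative.

```python
import re

def keyword_density(text, keyword):
    """Percentage of the words in `text` that belong to occurrences of `keyword`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = keyword.lower().split()
    if not words or not target:
        return 0.0
    n = len(target)
    # Count every position where the keyword phrase appears word for word.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return 100.0 * hits * n / len(words)

page_text = "Tax jobs in London: find tax jobs today"
density = keyword_density(page_text, "tax jobs")
print(f"{density:.1f}% (target range: 3% to 7%)")
```

A real page would be far longer than this sample, so its density would fall much closer to the target range.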

Always include your most relevant keywords for each pertinent section within the footer.

Have a complete sitemap that enables each search engine's spider to crawl your website in its entirety. Link to each section of your website using the keyword you want to rank for as the anchor text. When updating your website, submit the XML version of your sitemap to Google's Webmaster Central for indexation purposes.
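An XML sitemap can be produced from the list of section URLs. The following Python sketch uses only the standard library and the sitemaps.org 0.9 namespace; the function name `build_sitemap` is hypothetical, and the `https://` scheme in the example is an assumption, since sitemap entries need absolute URLs.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls):
    """Return a minimal XML sitemap listing every URL in `urls`."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        # Each page gets one <url><loc>...</loc></url> entry.
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="unicode")

# The scheme below is assumed for illustration.
print(build_sitemap([
    "https://www.thirtythreeweb.co.uk/tax-jobs/",
    "https://www.thirtythreeweb.co.uk/tax-jobs/london-tax-jobs",
]))
```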

Each time you use an image use the alt tag to describe the picture to the search engine spiders. It is important to include the most relevant keyword for that image. Search engine spiders do not classify images as content. In order to have the images indexed this must be done. Do it in a way that it includes your main keyword thus providing value in terms of SEO.
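Images without alt text are easy to miss by eye, so a small audit script can help. This is a minimal Python sketch using the standard `html.parser` module; the class name `AltAudit` and the helper `images_missing_alt` are illustrative names, and the script only reports missing or empty alt text, it does not judge keyword quality.

```python
from html.parser import HTMLParser

class AltAudit(HTMLParser):
    """Collect the src of every <img> whose alt text is missing or empty."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            if not (attributes.get("alt") or "").strip():
                self.missing.append(attributes.get("src", ""))

def images_missing_alt(html):
    audit = AltAudit()
    audit.feed(html)
    return audit.missing

sample = '<img src="a.png" alt="London tax jobs"><img src="b.png"><img src="c.png" alt="">'
print(images_missing_alt(sample))
```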

Page Rank

Page Rank is a measure created by Google that scores the value of your linking structure and of your website as a whole. Its real importance in determining Google results has been discussed many times. Some argue that it is a vital variable that governs the results, whereas others dismiss it as insignificant. What results have consistently shown over time is that Page Rank is one more important variable in the total equation which, combined with the others, produces the final output. Page Rank by itself is not the key, but it is a relevant component, so we have to work on all aspects.

Yahoo focuses mainly on site-wide links and on-page factors. It is all about volume and usage of proper anchor text as well as basic SEO on-page optimization. The nature and quality of the links have no impact here. We are talking about volume and anchor text as their main focal points.

MSN pays particular attention to updating frequency. The more often you update your content, the more often MSN's spider will visit. The MSN engine is particularly fond of rewarding websites with high updating frequencies and properly optimized on-page factors. Page Rank, authority, etc. have no importance here. Above all, it is about links and regularly updated content.

In practice, this means that Yahoo & MSN are much easier to spam than Google. Hence, achieving good results in Google is the key to ultimate success, since it will bring the most interesting levels of traffic as well as good rankings on the other engines, especially Yahoo.

Up to this point we know how search engines work and what we are supposed to give them in order to improve our results. Let’s take a further look into how to optimize.

Anchor Text Optimization

Anchor text, or link label, is the visible, clickable text in a hyperlink: the text a user clicks when following a link on a web page. In the HTML it is the text enclosed between the opening anchor tag (`<a>`) and its closing tag (`</a>`). The words contained in a link help to determine the topic of the page the link points to.

Anchor text is weighted highly in search engine algorithms because the linked text is usually relevant to the landing page. The objective of search engines is to provide highly relevant search results; this is where anchor text helps, as the tendency is, more often than not, to hyperlink words relevant to the landing page.

Optimization occurs when formatting key words as links or describing the links. The keywords being used need to describe the content on the page being linked to. Anchor text optimization is very important because search engine spiders will detect the text that is used as an active text link and will therefore assume that the website provides true value for that specific keyword. This basic variable in SEO strategy is often not considered by many webmasters thus reducing the results of their link building efforts. Links will have little or no value if they are not using the descriptive keywords that we want to rank for.

So if we want to rank for the keyword tax recruitment, we will need a considerable number of quality incoming links from other websites pointing to our site with that precise keyword as anchor text. This means that the active text link will display tax recruitment as its text. Search engine spiders will recognize this and assign value to our website for that keyword, thus increasing our chances of better results within the SERPs (search engine results pages). The same reasoning applies to each section with its corresponding keyword.
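To see how much of a page's linking actually uses the target keyword as anchor text, the anchors can be extracted and counted. The following Python sketch is an illustration under simple assumptions: it only looks at `<a>` elements with an `href`, compares anchor text case-insensitively, and the names `AnchorCollector` and `anchor_text_share` are hypothetical.

```python
from collections import Counter
from html.parser import HTMLParser

class AnchorCollector(HTMLParser):
    """Collect the normalized anchor text of every <a href=...> link."""

    def __init__(self):
        super().__init__()
        self.anchors = []
        self._buffer = None

    def handle_starttag(self, tag, attrs):
        if tag == "a" and dict(attrs).get("href"):
            self._buffer = []

    def handle_data(self, data):
        if self._buffer is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._buffer is not None:
            # Collapse whitespace and lowercase so variants compare equal.
            text = " ".join("".join(self._buffer).split()).lower()
            self.anchors.append(text)
            self._buffer = None

def anchor_text_share(html, keyword):
    """Percentage of links in `html` whose anchor text is exactly `keyword`."""
    collector = AnchorCollector()
    collector.feed(html)
    collector.close()
    if not collector.anchors:
        return 0.0
    counts = Counter(collector.anchors)
    return 100.0 * counts[keyword.lower()] / len(collector.anchors)

page = '<a href="/x">Tax Recruitment</a> <a href="/y">click here</a>'
print(anchor_text_share(page, "tax recruitment"))
```

An image-only link contributes an empty anchor text in this sketch, which is one way to spot links that carry no keyword at all.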

Link Popularity

Link Popularity is a measure of the quantity and quality of other web sites that link to a specific site on the World Wide Web. It is an example of the move by search engines towards off-the-page criteria to determine quality content. In theory, off-the-page criteria add impartiality to search engine rankings. Link popularity plays an important role in the visibility of a web site among the top search results. Indeed, some search engines require at least one link pointing to a web site; otherwise they will drop it from their index.

Types of links:

Reciprocal link is a mutual link between two objects, commonly between two websites to ensure mutual traffic. Example: Alice and Bob have websites. If Bob’s website links to Alice’s website, and Alice’s website links to Bob’s website, the websites are reciprocally linked. Website owners often submit their sites to reciprocal link exchange directories, in order to achieve higher rankings in the search engines. Reciprocal linking between websites is an important part of the search engine optimization process because Google uses link popularity algorithms (defined as the number of links that led to a particular page and the anchor text of the link) to rank websites for relevancy.

Backlinks are incoming links to a website or web page. The number of backlinks is an indication of the popularity or importance of that website or page. In basic link terminology, a backlink is any link received by a web node (web page, directory, website, or top level domain) from another web node. Backlinks are also known as incoming links, inbound links, inlinks, and inward links.

Deep linking, on the World Wide Web, is making a hyperlink that points to a specific page or image on another website, instead of that website’s main or home page. Such links are called deep links.

Three way linking (siteA -> siteB -> siteC -> siteA) is a special type of reciprocal linking. The attempt of this link building method is to create more “natural” links in the eyes of search engines. The value of links by three-way linking can then be better than normal reciprocal links, which are usually done between two domains.

One way link is a term used among webmasters for link building methods. It is a hyperlink that points to a website without any reciprocal link; thus the link goes “one way” in direction. Many industry consultants suspect that this type of link is considered more natural in the eyes of search engines.

Search engines such as Google use a proprietary link analysis system to rank web pages. Citations from other WWW authors help to define a site’s reputation. The philosophy of link popularity is that important sites will attract many links, while content-poor sites will have difficulty attracting any. Link popularity assumes that not all incoming links are equal; an inbound link from a major directory carries more weight than an inbound link from an obscure personal home page. In other words, the quality of incoming links counts more than their sheer number.

A remarkable aspect here is that in Google both the quality and the quantity of incoming links are incredibly important. Yahoo & MSN do not apply this filter; for them it's all about volume and content updating frequency, regardless of the growth rate and quality of the incoming links.

Pertaining to Google our strategy needs to be to get as many quality links as possible to our website, but at a steady rate over time.

What does Google look for regarding quality?

Google wants to see incoming links from related websites. The more authority those websites have in the niche we are targeting, the better the link score will be. From Google's perspective, a single quality link from a related website with some authority in the niche is ten times better than 100 links from other websites that have nothing to do with that niche. So in Google, when it comes to links, it is all about quality, content relationship and similarity between websites.

What is a steady rate?

The time restriction reflects the fact that the link building process has to seem natural. For example, if you get 2,000 incoming links from related websites with a high Page Rank and some authority within a 48-hour period, this will look unnatural. To avoid being flagged by Google, set a steady rate of growth for your incoming links that does not look exaggerated. The process has to be constant and plausible over time.

This last aspect has been widely discussed in the SEO community, since link velocity (the rate at which incoming links are acquired) is a major factor in determining search engine results.
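A simple way to keep an eye on link velocity is to count new links per day and flag days that stand far above the typical day. The Python sketch below is only an illustration of that idea: the function name `link_spikes` and the factor of 5 are arbitrary assumptions, not thresholds published by any search engine, and days with no new links are ignored when computing the typical day.

```python
from collections import Counter
from datetime import date
from statistics import median

def link_spikes(acquired_on, factor=5.0):
    """Return the days whose new-link count exceeds `factor` times the median day."""
    per_day = Counter(acquired_on)
    if not per_day:
        return []
    typical = median(per_day.values())
    return sorted(day for day, count in per_day.items() if count > factor * typical)

# Hypothetical acquisition dates: one entry per incoming link discovered.
history = ([date(2024, 1, 1)] * 2
           + [date(2024, 1, 2)] * 3
           + [date(2024, 1, 3)] * 40)
print(link_spikes(history))
```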

What not to do!

Link spamming is a technique that has been widely used in the Black Hat SEO community. It consists of acquiring thousands of incoming links to a site daily. This is mainly achieved by allocating links to a site using RSS, and also by spamming search engines, guest-books, forums and other traditional black hat resources. The tremendous increase in incoming links at such a high link velocity enables the site to easily surpass well-established competing websites in a short period. This process is shunned by Google, and a site found to be link spamming will be sent back to the Sandbox.

The benefits of link spamming are indeed enormous in the short term, but the website may lose all SEO value if caught. The purpose of mentioning this is to show both sides of the coin, so that you know the strategy and can fight back if you come under attack from a competitor's black hat activity. Most website owners will be outranked very easily by black hat spammers unless they know these methods and can counter such attacks.

For the purposes and nature of our work, and since we are dealing with a long term commitment to SEO, White Hat methods are the only approach we use.

Domain Age and Authority

Domain Age and Authority are concepts widely used in SEO jargon, and the two are correlated. The reason is that it is now Google's policy that each new website has to prove its value by providing original, useful content to human readers and by working on its link building structure for a minimum period of time.

During this period the website is kept in what is known as the Sandbox and is not assigned any Page Rank. If, after this minimum period, Google observes improvements in the website, the site will be assigned a Page Rank and will be on the path towards higher rankings.

Content Authority

A general problem on many websites is the lack of unique content throughout the site. Most websites have unique navigation menus and query bars but not enough of the key element, which is fresh and unique content.

So the suggestions are to:

Categorize each section of your website into mini-niches and provide unique content according to each niche. These content pages MUST bring further value to your visitors. Try to elaborate as much as possible on each topic in each section. Your goal is to turn your website into an encyclopaedia populated with original, targeted and unique quality content.

When working on each page, try to make it keyword-rich on a selected keyword(s). This means that you must have a keyword density of 3% to 7% for the target term. Remember to bold and sometimes even underline the target term. This will indicate to search engine spiders (combined with other SEO factors) that those are the keywords you are weighting in that particular section.

Have your website organized in a way that with each new step on different levels you are getting more and more into specific content. If you are always redirecting on multiple sections of your website to the same pages you are not providing the navigational flow that Google expects from a quality website. Instead, try to dig deeper into each section when providing links. Long tail or very specific keywords should be used for this purpose. This will make Google index your website as an authority content source provider in the niche by covering all relevant aspects & variations thus enabling your website to be established as a top authority in the field.

It is extremely important to avoid duplicate content issues. Each section should have its own unique content. If we repeat the same content and replace only the names of the cities, we may be vulnerable to duplicate content penalties. This actually happened to one of the most important travel & booking websites on the Internet. Its Latin American branch was using the same rough content on several sections, changing only the target keyword. Google quickly noticed the trick and applied severe penalties to the website.

The same idea applies if we are just getting the information for each city from an API or a public resource: it is NOT unique content. So if that is the only option (instead of working on unique copyrighted in-house produced content) I suggest that we mix the formula by injecting some of our own content (will have to be hand made) among public data scraped contents.
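Near-duplicate pages, such as city pages that differ only in the city name, can be spotted before publication by comparing their text. The following Python sketch measures the overlap of three-word shingles with a Jaccard score. It is a rough in-house check under stated assumptions, not Google's duplicate-content filter; the function names, the shingle size and the sample sentences are illustrative.

```python
import re

def shingles(text, size=3):
    """Return the set of consecutive `size`-word sequences in `text`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}

def similarity(a, b, size=3):
    """Jaccard similarity of word shingles: 0.0 is disjoint, 1.0 is identical."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)

london = "Find the best tax jobs in London with our specialist recruiters"
norwich = "Find the best tax jobs in Norwich with our specialist recruiters"
print(similarity(london, norwich))
```

On full-length pages, swapping only the city name leaves the score close to 1.0, which is a signal that the page needs genuinely different, hand-written content.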

How Search Engines Work

Search engines consider the following variables important when ranking a website:

- Domain Age

- Title

- Meta-tags

- Unique Content

- Keyword Density

- Anchor Text

- Anchor Text Percentage (as a percentage of all links)

- Domain Page Rank

- Back-links Page Rank

- HTML Validation mistakes

- Back-links (as a proportion of all links)

There are two main functions that must be addressed when working on SEO. The first deals with on-page aspects such as content, keyword density, sitemap layout, meta tags, title tags, header tags and alt tags. The other part of the formula deals with the distribution and nature of incoming links across the website and is known as off-page optimization. Both are necessary for achieving high-ranking results.

In this study we will assume that we are focusing our optimization efforts on Google, Yahoo & MSN (in that order), since they are the most important search engines in terms of traffic and associated revenue. Our purpose is to set out a complete optimization plan with the behaviour of these search engines in mind.

We now know the main variables of SEO, but how does each of the above-mentioned search engines weigh each variable? It is imperative to understand what each search engine values most. This will not only allow us to understand the actual results on the search engine results pages (SERPs) for niche keywords, but will also determine the guidelines for the optimization plan.

If we provide each search engine with the details it wants, we can be confident that we will achieve better ranking results. Having said this, the truth is that we will usually work primarily on optimizing for Google. Google's requirements are the standard that the other engines seek to emulate. We will find that by meeting Google's requirements properly, better results on the other search engines will follow.

So what does Google value most then?

Google values, in order:

- Authority in content

- Domain Age

- Anchor Text Optimization & Incoming links levels (Link Popularity) and Page Rank

There is an unknown number of other variables that the Google algorithm is designed to test for. It is rumoured that there may be as many as five million different variables. Some other important variables in the general equation are:

- Click-through rate on SERPs

- Visitors' time on the website

- HTML validation